Technical SEO Strategies

Technical SEO refers to optimizing your website for search engines while also improving the experience for the people who use it. It’s important to get this right, as these factors can have a large impact on your site’s search engine rankings.

Issues such as broken links inhibit the ability of spiders to crawl and eventually index your site, and pages that aren’t indexed can’t rank. We also know that as of 2018, page speed is a ranking factor in mobile searches too, not just on desktop.

Google wants to help people answer questions. If your site takes forever to load, it creates a poor user experience and Google won’t rank you as high. In this article we’ll discuss how you can go about implementing technical SEO on your site.

Issues we'll cover:

- Security with SSL

- Site Performance and Speed

- Utilizing Sitemaps and Robots.txt files

- Fixing Broken Links

- And more

Technical SEO Techniques

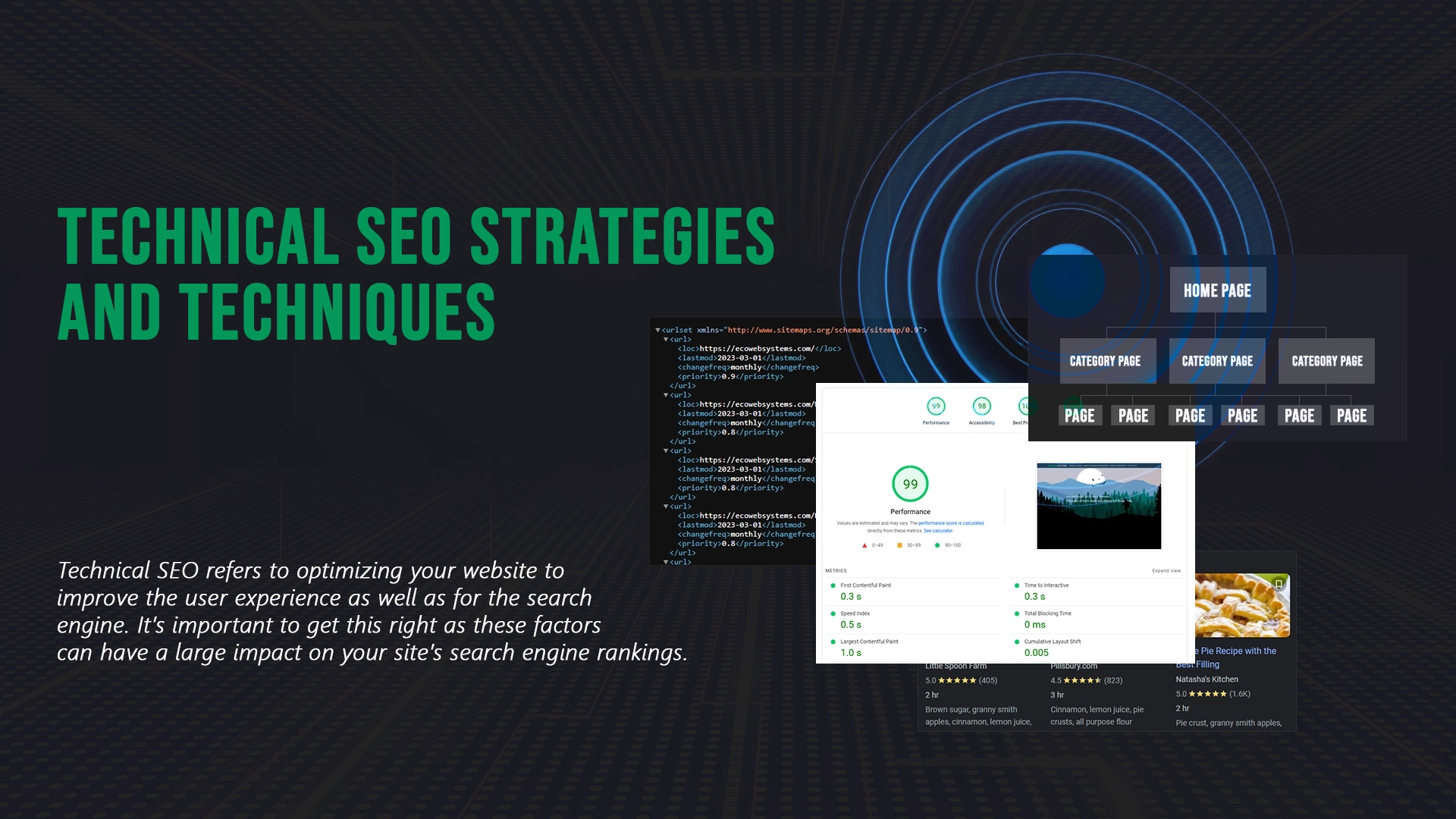

1. Create a Clean SEO-Friendly Site Structure

This refers to the way your pages are linked together within your site. With technical SEO we’re trying to make it as easy as possible for the bots to crawl your site. One issue a clean structure clears up immediately is “orphan pages”. These are pages on your site with no internal links pointing to them, which makes it very hard for the bots to find and crawl them. Here’s an example of a clean site structure:

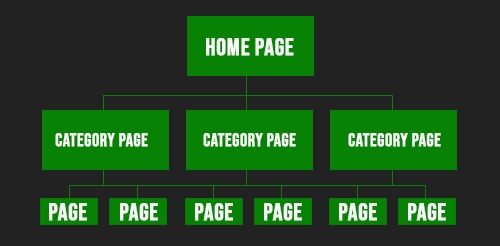
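If you want to hunt for orphan pages yourself, here is a minimal Python sketch. It assumes you already have a list of every page you know about (for example from your sitemap) and a map of which page links to which. The page paths and the `find_orphan_pages` helper are made up for illustration. It also goes one step further than "no internal links" and flags anything a bot could not reach by clicking through from the home page.

```python
from collections import deque


def find_orphan_pages(all_pages, links, home):
    """Return the pages that cannot be reached from `home` via internal links."""
    seen = {home}
    queue = deque([home])
    # Breadth-first walk over the internal link graph, like a crawler would do.
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return sorted(set(all_pages) - seen)


# Hypothetical site: each key is a page, each value lists the pages it links to.
site_links = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/services/seo/"],
    "/blog/": ["/blog/technical-seo/"],
}
known_pages = [
    "/",
    "/services/",
    "/services/seo/",
    "/blog/",
    "/blog/technical-seo/",
    "/old-landing-page/",
]

orphans = find_orphan_pages(known_pages, site_links, "/")
print(orphans)
```

In this made-up example nothing links to /old-landing-page/, so that is the page you would want to link to from somewhere, redirect, or remove.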

2. Submit Your Sitemap and Create a robots.txt

Once your site structure is cleaned up you’ll want to create an XML sitemap and submit it to Google via your Google Search Console. A sitemap aids Google in finding your webpages. This becomes increasingly important when your site has a lot of pages.

Here’s a snippet of the Ecoweb Systems sitemap:

Once you’ve created your sitemap.xml file, place it in the root folder like this:

yourdomain.com/sitemap.xml
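Most CMS platforms and SEO plugins will generate the sitemap for you, but if you ever need to build one by hand, a rough Python sketch could look like the following. The URLs and dates are placeholders, and `build_sitemap` is a hypothetical helper, not part of any tool mentioned in this article.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified_or_None) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            # lastmod is optional; use a YYYY-MM-DD date when you have one.
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder pages for illustration only.
sitemap_xml = build_sitemap([
    ("https://yourdomain.com/", "2024-01-15"),
    ("https://yourdomain.com/about/", None),
])

with open("sitemap.xml", "w", encoding="utf-8") as f:
    f.write(sitemap_xml)
```

Upload the resulting sitemap.xml to your root folder and submit its URL in Google Search Console.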

Along with your sitemap you’ll want to add a robots.txt file to your root directory. This file tells visiting bots which of them are allowed to crawl and where they should or shouldn’t crawl. It’s really simple and looks like this:

User-agent: *

Disallow: /Auth/

- User-agent refers to the bots. A “*” means all bots.

- Disallow tells the bots which areas they should NOT crawl. Let’s say you have a site where users log in and are taken to their account page. Generally we don’t want that to be crawled or indexed, so if the account pages lived in a directory called “Auth” we would write Disallow: /Auth/ in our robots.txt file. Be careful: a bare Disallow: / tells the bots to stay away from your entire site.
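Before you upload a robots.txt file it is worth checking that the rules do what you think they do. Here is a small sketch using the robots.txt parser from Python's standard library. The `is_allowed` helper and the yourdomain.com URLs are placeholders for illustration.

```python
from urllib.robotparser import RobotFileParser

# Rules from the "Auth" example: every bot may crawl everything except /Auth/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /Auth/
"""

# A bare "/" blocks the whole site, which is rarely what you want.
BLOCK_EVERYTHING = """\
User-agent: *
Disallow: /
"""


def is_allowed(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt rules let user_agent crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(ROBOTS_TXT, "https://yourdomain.com/Auth/account"))  # False
print(is_allowed(ROBOTS_TXT, "https://yourdomain.com/blog/"))         # True
print(is_allowed(BLOCK_EVERYTHING, "https://yourdomain.com/blog/"))   # False
```

Note the difference between the last two results: with Disallow: / even your blog is off limits to the bots.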

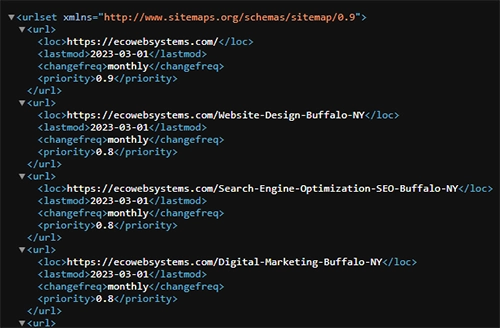

3. Improve Your Page Speed and Performance

As stated above, page speed is a confirmed ranking factor for both desktop and mobile searches. There are several items to be mindful of, and a great way to see where you stand and how you can improve is Google’s PageSpeed Insights. Here’s what the report for ecowebsystems.com looks like:

4. Mobile Usability

By now you know that responsive, mobile friendly design should be a given. From the layout to font-size, to image sizes, Google wants your site to work great in the mobile world. Google Search Console contains an entire mobile usability section to help you out if you’re not sure or in case you missed something.

You should have Google Search Console by now… right? It’s invaluable, really. If you don’t have it yet, get on that and set it up.

5. Use an SSL Certificate (HTTPS)

HTTPS is the secure version of HTTP and it’s meant to keep personal data and information safe when transferred over the web. In 2014 Google confirmed it was a ranking signal and besides, we should all be doing what we can to make the internet safer. If your site currently doesn’t have an SSL certificate you can either ask your hosting provider or head on over to Let's Encrypt for a free one.

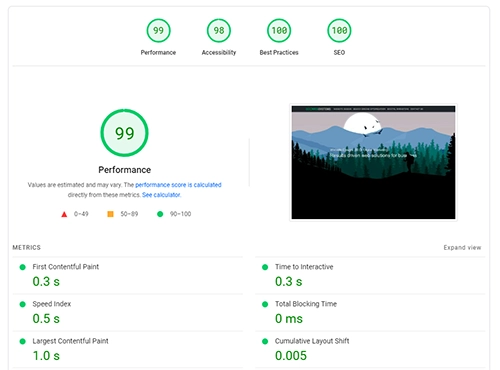

6. Structured Data

Have you noticed how sometimes search results look different, like for instance when you search for a recipe?

That’s because of something called structured data, and Google supports a lot of it. By adding it to your pages you're putting your site in a better position to win those coveted search results called rich snippets.

For some of the best info and help with structured data check out https://schema.org/
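To make this concrete, here is a rough Python sketch that builds JSON-LD markup for a recipe page using the schema.org Recipe type. The recipe details and the `recipe_json_ld` helper are invented for illustration, and a real recipe page would usually carry more properties than this.

```python
import json


def recipe_json_ld(name, author, ingredients, prep_minutes):
    """Return a <script> tag containing schema.org Recipe markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "author": {"@type": "Person", "name": author},
        "recipeIngredient": ingredients,
        # schema.org expects durations in ISO 8601 format, e.g. PT15M.
        "prepTime": f"PT{prep_minutes}M",
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Made-up recipe for illustration only.
snippet = recipe_json_ld(
    name="Simple Pancakes",
    author="Jane Doe",
    ingredients=["1 cup flour", "1 egg", "1 cup milk"],
    prep_minutes=15,
)
print(snippet)
```

You would paste the resulting script tag into the HTML of the page it describes, then test the page with Google's rich results testing tool.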

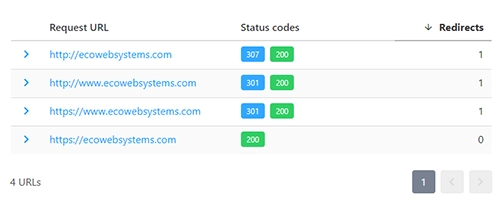

7. Only One Version of Your Site Accessible

You want to make sure bots have only one version of your site to crawl to avoid duplicate content issues. For example, let’s take these four URLs:

http://yoursite.com

http://www.yoursite.com

https://yoursite.com

https://www.yoursite.com

If three of the four don’t redirect to the remaining one, you need to fix that. You can check this for your site at https://httpstatus.io/. Here’s what you want to see when you run that check:

If yours doesn’t look similar to this you’ll need to set up a permanent 301 redirect. Which brings us to our next technique.
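The rule being checked can be sketched in a few lines of Python. This version does not make any network requests. It assumes you have already noted the status code and redirect target for each of the four URLs (for example from the httpstatus.io results), and the `check_single_version` helper and the sample responses are hypothetical.

```python
def check_single_version(responses, canonical):
    """Check that only `canonical` answers 200 and every other URL 301s to it.

    `responses` maps each URL to (status_code, redirect_target_or_None).
    Returns a list of human-readable problems; an empty list means all good.
    """
    problems = []
    for url, (status, location) in responses.items():
        if url == canonical:
            if status != 200:
                problems.append(f"{url}: expected 200, got {status}")
        elif status != 301:
            problems.append(f"{url}: expected 301, got {status}")
        elif location is None or location.rstrip("/") != canonical.rstrip("/"):
            problems.append(f"{url}: redirects to {location}, not {canonical}")
    return problems


# Hand-written sample results, assuming https://www.yoursite.com is the keeper.
observed = {
    "http://yoursite.com": (301, "https://www.yoursite.com/"),
    "http://www.yoursite.com": (301, "https://www.yoursite.com/"),
    "https://yoursite.com": (200, None),  # duplicate version still live
    "https://www.yoursite.com": (200, None),
}

issues = check_single_version(observed, "https://www.yoursite.com")
for issue in issues:
    print(issue)
```

In this sample the non-www HTTPS version still serves the site directly, so it is the one that needs a redirect.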

8. Fix Broken Links

It happens: as sites grow, a link to a page that was moved or deleted remains on another page, and when a bot follows that link it hits a dead end. Google frowns on this, and so do your visitors. Find them, fix them.
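Plenty of tools will find broken links for you, but the idea is simple enough to sketch in Python: collect every link on a page, look up its status code, and report anything in the 400s or 500s. In this sketch the status lookup is passed in as a function so the example runs without touching the network. The sample HTML, the URLs and the `find_broken_links` helper are all made up for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the absolute URL of every <a href="..."> on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page's own URL.
                self.links.append(urljoin(self.base_url, href))


def find_broken_links(html, base_url, get_status):
    """Return the links in `html` whose status code is 400 or higher."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    return [url for url in collector.links if get_status(url) >= 400]


# Made-up page and status codes; a real check would request each URL.
page_html = """
<p>Read our <a href="/services/">services</a> page or this
<a href="old-post/">older post</a>.</p>
"""
fake_statuses = {
    "https://yoursite.com/services/": 200,
    "https://yoursite.com/blog/old-post/": 404,
}

broken = find_broken_links(
    page_html, "https://yoursite.com/blog/", lambda url: fake_statuses.get(url, 200)
)
print(broken)
```

For each broken link you find, either update it to point at the new location or set up a 301 redirect from the old URL.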

9. Periodic Testing

The best way to stay on top of technical SEO is to run an audit every quarter or so. Depending on how much you’re editing, you may want to do it more frequently. Build yourself a checklist, document who ran each audit and when, and stay on top of things.